Everything You Need To Know About The Face-Swap Technology That's Sweeping The Internet

In the past couple of months, "deepfake" has gone from a nonsense word to a widely used synonym for videos in which one person's face is digitally grafted onto another person's body. The most popular, and most troubling, type of deepfake is artificially produced porn that appears to star famous actresses like Gal Gadot, Daisy Ridley and Scarlett Johansson. Sites like Reddit and Pornhub have moved to ban pornographic deepfakes in recent days, but it's never been easier for anyone with an internet connection to make disturbingly real-looking porn by mapping almost anyone's face over those of porn performers. Here's what you need to know.

'Deepfake' Celebrity Porn First Emerged In December

In an only somewhat hyperbolically titled article called "AI-Assisted Fake Porn Is Here and We're All Fucked," Motherboard's Samantha Cole interviewed the first Redditor to post convincing face-swapped videos, who called himself "deepfakes." ("Deepfake" has since become the term used for the doctored videos produced by the technology.) "Deepfakes" explained how he created a porn video appearing to star Gal Gadot.

According to deepfakes — who declined to give his identity to me to avoid public scrutiny — the software is based on multiple open-source libraries, like Keras with TensorFlow backend. To compile the celebrities' faces, deepfakes said he used Google image search, stock photos, and YouTube videos. Deep learning consists of networks of interconnected nodes that autonomously run computations on input data. In this case, he trained the algorithm on porn videos and Gal Gadot's face. After enough of this "training," the nodes arrange themselves to complete a particular task, like convincingly manipulating video on the fly…

"I just found a clever way to do face-swap," he said, referring to his algorithm. "With hundreds of face images, I can easily generate millions of distorted images to train the network," he said. "After that if I feed the network someone else's face, the network will think it's just another distorted image and try to make it look like the training face."

Soon, Someone Built An App That Made It Easy For Just About Anyone To Create Deepfakes

Deepfaking was democratized within weeks of Motherboard's first report about it, as another Redditor created an app that made it possible for any motivated person to create bespoke fake porn.

After the first Motherboard story, the user created their own subreddit, which amassed more than 91,000 subscribers. Another Reddit user called deepfakeapp has also released a tool called FakeApp, which allows anyone to download the AI software and use it themselves, given the correct hardware…

According to FakeApp's user guide, the software is built on top of TensorFlow. Google employees have pioneered similar work using TensorFlow with slightly different setups and subject matter, training algorithms to generate images from scratch.

[Quartz]

The Law Doesn't Have Many Existing Solutions For People Victimized By Face-Swapped Porn

Legally speaking, people whose faces have been inserted into porn without their consent don't have many clear protections.

Face-swap porn may be deeply, personally humiliating for the people whose likeness is used, but it's technically not a privacy issue. That's because, unlike a nude photo filched from the cloud, this kind of material is bogus. You can't sue someone for exposing the intimate details of your life when it's not your life they're exposing.

And it's the very artifice involved in these videos that provides enormous legal cover for their creators… Since US privacy laws don't apply, taking these videos down could be considered censorship—after all, this is "art" that redditors have crafted, even if it's unseemly.

[Wired]

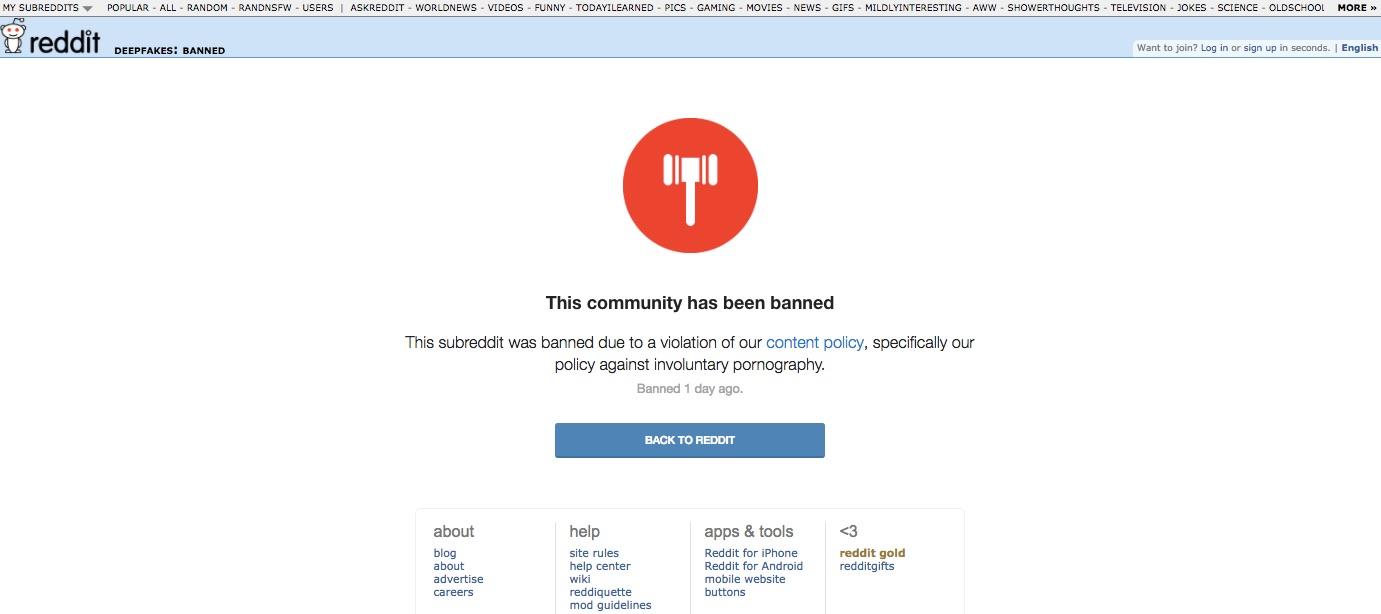

Reddit And Pornhub Have Recently Banned Face-Swapped Porn

As the deepfake scourge has spread, many sites have banned it, including Twitter, the chat service Discord and the image hosting platform Gfycat. This week, Reddit changed its terms of service to ban non-consensually faked porn images.

"We want to let you know that we have made some updates to our site-wide rules against involuntary pornography and sexual or suggestive content involving minors. These policies were previously combined in a single rule; they will now be broken out into two distinct ones," Reddit said in a post on its site Wednesday.

The website said it "prohibits the dissemination of images or video depicting any person in a state of nudity or engaged in any act of sexual conduct apparently created or posted without their permission, including depictions that have been faked."

[CNBC]

And Pornhub, the major free porn site, also announced this week that it considers deepfakes "nonconsensual content" that violate its terms of service.

"We do not tolerate any nonconsensual content on the site and we remove all said content as soon as we are made aware of it," a spokesperson told me in an email. "Nonconsensual content directly violates our TOS [terms of service] and consists of content such as revenge porn, deepfakes or anything published without a person's consent or permission." Pornhub previously told Mashable that it has removed deepfakes that are flagged by users.

However, a Motherboard reporter was able to find dozens of deepfakes on Pornhub even after the site's announcement.

Face-Swapping Technology Could Potentially Have Some Non-Evil Purposes

Deepfakers aren't all misogynistic pervs. Some have used the technology for benign purposes, like casting Nicolas Cage as James Bond and convincingly erasing Henry Cavill's mustache.

Dr. Louis-Philippe Morency, director of the MultiComp Lab at Carnegie Mellon University, gave Mashable a couple of examples of potentially socially beneficial uses of face-swapping technology.

One moonshot example: Dr. Morency said soldiers suffering from post-traumatic stress disorder could eventually video-conference with doctors using similar technology. An individual could face-swap with a generic model without sacrificing the ability to convey his or her emotions. In theory, this would encourage people to get treatment who might otherwise be deterred by a perceived stigma, and the quality of their treatment wouldn't suffer due to a doctor being unable to read their facial cues.

Another one of Dr. Morency's possibilities — and its own can of worms — would be to use models in video interviews to remove gender or racial bias when hiring. But for any of this to happen, researchers need more data, and open-source, accessible programs like FakeApp can help create that data.

[Mashable]